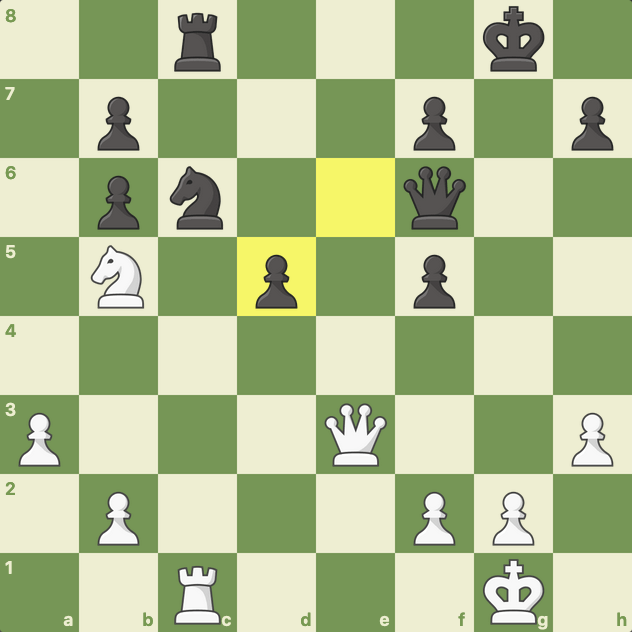

"Some doubted that a computer would ever play as well as a top human. [Computers,] good as they are, are quite poor at other kinds of decision making," said Murray Campbell, a research scientist at IBM Research.

"The more interesting thing we showed was that there's more than one way to look at a complex problem," Campbell told Live Science. "You can look at it the human way, using experience and intuition, or in a more computer-like way." Those methods complement each other, he said.

Although Deep Blue's win proved that humans could build a machine that's a great chess player, it underscored the complexity and difficulty of building a computer that could handle a board game. IBM scientists spent years constructing Deep Blue, and all it could do was play chess, Campbell said. Building a machine that can tackle different tasks, or that can learn how to do new ones, has proved more difficult, he added.

Learning machines

At the time Deep Blue was built, the field of machine learning hadn't progressed as far as it has now, and much of today's computing power wasn't available yet, Campbell said. IBM's next intelligent machine, named Watson, for example, works very differently from Deep Blue, operating more like a search engine. Watson proved that it could understand and respond to humans by defeating longtime "Jeopardy!" champions in 2011.

Machine learning systems developed in the past two decades also make use of huge amounts of data that simply didn't exist in 1997, when the internet was still in its infancy. The artificially intelligent computer program called AlphaGo, for example, which beat the world's top player of the board game Go, also works differently from Deep Blue. AlphaGo played many games against itself and used the resulting patterns to learn optimal strategies. The learning happened via neural networks, programs loosely modeled on the way neurons in the human brain operate. The hardware needed to build them wasn't practical in the 1990s, when Deep Blue was built, Campbell said.